Let’s discuss the importance of data integration in today’s data-driven world and explore different techniques such as ETL, data pipelines, real-time processing, and cloud-based integration. This blog compares these approaches based on factors like processing mode, complexity, data latency, and scalability to help organizations make informed decisions about the best method for their specific needs.

In our increasingly data-driven world, enterprise organizations face significant challenges in integrating vast amounts of data from multiple sources while keeping that data accurate, timely, and accessible. A successful data integration strategy can streamline operations, improve decision-making, and drive innovation. However, choosing the right integration approach means weighing different methods, tools, and technologies against each other. Let’s dive into the main data integration techniques, including Extract, Transform, Load (ETL), data pipelines, real-time processing, and cloud-based data integration, and compare them to help enterprises select the most appropriate solution for their needs.

Understanding Data Integration in the Enterprise Landscape

Data integration refers to the process of combining data from different sources and making it available in a unified format to deliver a cohesive view of organizational information. This process is essential for organizations that rely on multiple systems, databases, and applications, such as CRM, ERP, and financial systems. Effective data integration ensures that data flows seamlessly across the enterprise, enhancing collaboration and enabling data-driven decisions.

At its core, data integration involves several key components:

- Data Mapping: The process of matching data fields between disparate data sources and the target system, ensuring that the right data is transferred and transformed correctly.

- Data Pipelines: The automated flow of data from one system to another, which may include data extraction, transformation, and loading, as well as monitoring and managing data flow over time.

- Data Quality: Ensuring that the integrated data is clean, accurate, and consistent to support high-quality decision-making.
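To make data mapping and data quality concrete, here is a minimal Python sketch that renames source fields to a target schema and then checks each mapped record. The field names, the `FIELD_MAP` dictionary, and the two validation rules are hypothetical, chosen only for illustration.

```python
# Hypothetical mapping from a source CRM export to a target schema.
FIELD_MAP = {
    "cust_id": "customer_id",
    "fname": "first_name",
    "lname": "last_name",
    "email_addr": "email",
}

def map_record(source: dict) -> dict:
    """Data mapping: rename source fields to target fields; unmapped fields are dropped."""
    return {target: source.get(src) for src, target in FIELD_MAP.items()}

def quality_issues(record: dict) -> list[str]:
    """Data quality: return a list of problems; an empty list means the record passes."""
    issues = []
    if not record.get("customer_id"):
        issues.append("missing customer_id")
    email = record.get("email") or ""
    if "@" not in email:
        issues.append("invalid email")
    return issues

source_rows = [
    {"cust_id": "C1", "fname": "Ada", "lname": "Lovelace",
     "email_addr": "ada@example.com", "legacy_flag": "Y"},
    {"cust_id": "", "fname": "Bob", "lname": "Smith", "email_addr": "bob.example.com"},
]

for row in source_rows:
    mapped = map_record(row)
    print(mapped, quality_issues(mapped))
```

Records that report issues would typically be routed to a review queue rather than loaded into the target system.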

In this context, several integration approaches can be employed depending on the organization’s specific requirements, infrastructure, and data sources.

Key Approaches to Data Integration

1. ETL (Extract, Transform, Load)

Extract, Transform, Load (ETL) is one of the oldest and most widely used data integration methods. It involves three stages:

- Extract: Data is pulled from multiple sources, such as databases, flat files, and APIs.

- Transform: Data is cleansed, enriched, and transformed to fit the target system’s format.

- Load: The transformed data is then loaded into the target data store, often a data warehouse or data lake.
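The three stages can be sketched in a few lines of Python using only the standard library. In this sketch, an inline CSV string stands in for the source system and an in-memory SQLite database stands in for the data warehouse; the sample rows and the rule that rejects orders without an amount are hypothetical.

```python
import csv
import io
import sqlite3

# Extract: a CSV export stands in for a source system (hypothetical data).
RAW_CSV = """order_id,customer,amount,currency
1001, alice ,19.99,usd
1002,BOB,5.00,USD
1003,carol,,usd
"""

def extract(text: str) -> list[dict]:
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows: list[dict]) -> list[tuple]:
    """Cleanse and standardize rows; skip rows that fail a basic business rule."""
    out = []
    for r in rows:
        if not r["amount"]:
            continue  # hypothetical business rule: orders without an amount are rejected
        out.append((
            int(r["order_id"]),
            r["customer"].strip().title(),   # trim whitespace, normalize capitalization
            round(float(r["amount"]), 2),
            r["currency"].strip().upper(),
        ))
    return out

def load(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL, currency TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?, ?)", rows)
    conn.commit()

warehouse = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(warehouse, transform(extract(RAW_CSV)))
print(warehouse.execute("SELECT * FROM orders ORDER BY order_id").fetchall())
```

Because the transformation runs before the load, only cleansed rows reach the target table, which is where ETL’s consistency benefit comes from.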

Advantages of ETL (Extract, Transform, Load):

- Ensures consistency and accuracy of data by cleaning and transforming it before loading.

- Supports complex data transformations and business rules.

- Ideal for batch processing large volumes of data.

Disadvantages of ETL (Extract, Transform, Load):

- Traditional ETL processes can be slow, especially when handling large datasets or complex transformations.

- Real-time data integration can be difficult to implement, as ETL is typically designed for batch processing.

2. Data Pipelines

Data pipelines automate the flow of data between systems so that it moves reliably and at scale. A data pipeline can include ETL steps, but its focus is on moving data automatically, either continuously or on demand. Data pipelines can support both batch processing and real-time processing.
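One common way to build a pipeline that serves both modes is to chain lazy stages, so each record flows through every step as soon as it is read. The Python sketch below assumes hypothetical stages (`parse`, `validate`, `enrich`) and a made-up record format; the same `run_pipeline` function processes a finite batch or an unbounded stream.

```python
import itertools
from typing import Callable, Iterable, Iterator

Stage = Callable[[Iterable[dict]], Iterator[dict]]

def parse(records: Iterable[dict]) -> Iterator[dict]:
    for r in records:
        yield {**r, "amount": float(r["amount"])}

def validate(records: Iterable[dict]) -> Iterator[dict]:
    for r in records:
        if r["amount"] >= 0:  # hypothetical rule: drop negative amounts
            yield r

def enrich(records: Iterable[dict]) -> Iterator[dict]:
    for r in records:
        yield {**r, "large": r["amount"] > 100}

def run_pipeline(source: Iterable[dict], stages: list[Stage]) -> Iterator[dict]:
    """Chain stages lazily; nothing runs until the caller starts consuming records."""
    stream: Iterable[dict] = source
    for stage in stages:
        stream = stage(stream)
    return iter(stream)

# Batch mode: a finite list of records.
batch = [{"id": 1, "amount": "250"}, {"id": 2, "amount": "-5"}, {"id": 3, "amount": "40"}]
for rec in run_pipeline(batch, [parse, validate, enrich]):
    print(rec)

# Streaming mode: an unbounded source; records are processed one at a time.
endless = ({"id": i, "amount": str(i * 60)} for i in itertools.count())
first_three = list(itertools.islice(run_pipeline(endless, [parse, validate, enrich]), 3))
print(first_three)
```

Keeping each stage a small, independent function also makes it easier to add the validation and monitoring steps that pipelines need to avoid data quality issues.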

Advantages of Data Pipelines:

- Scalable for large datasets and diverse data sources.

- Supports both batch and real-time data integration.

- Can be cloud-based, offering flexibility and ease of deployment.

Disadvantages of Data Pipelines:

- Complexity in managing and maintaining the pipeline, especially with real-time data.

- Data quality issues may arise if proper validation and monitoring mechanisms are not in place.

3. Real-Time Processing

Real-time processing involves capturing and processing data as it is generated, providing immediate insights and updates. This approach is ideal for organizations that need to respond to events in real time, such as e-commerce transactions, financial trading, or sensor data from IoT devices.
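A minimal sketch of the real-time pattern, using fraud detection as the example: each event is handled as it arrives, and only a small rolling window of recent amounts is kept per account. The window size, the 3x threshold, and the event fields are hypothetical; in a real deployment the input would come from a streaming platform or message broker rather than a Python list.

```python
from collections import defaultdict, deque
from typing import Iterable, Iterator

WINDOW = 5        # hypothetical: number of recent transactions kept per account
THRESHOLD = 3.0   # hypothetical: flag amounts above 3x the recent average

def detect_anomalies(events: Iterable[dict]) -> Iterator[dict]:
    """Process events one at a time, keeping only a small rolling state per account."""
    history = defaultdict(lambda: deque(maxlen=WINDOW))
    for event in events:
        recent = history[event["account"]]
        if recent:
            avg = sum(recent) / len(recent)
            if event["amount"] > THRESHOLD * avg:
                yield {**event, "reason": f"amount {event['amount']} > {THRESHOLD}x avg {avg:.2f}"}
        recent.append(event["amount"])  # the oldest value drops out once the window is full

# A list stands in for a live event stream.
stream = [
    {"account": "A", "amount": 20.0},
    {"account": "A", "amount": 25.0},
    {"account": "B", "amount": 500.0},
    {"account": "A", "amount": 400.0},
]
for alert in detect_anomalies(stream):
    print(alert)
```

Because the state per account is bounded, memory use stays flat no matter how long the stream runs; the harder infrastructure problems are throughput and fault tolerance.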

Advantages of Real-Time Processing:

- Enables immediate data insights, supporting rapid decision-making.

- Enhances the responsiveness of business processes, such as customer service or fraud detection.

- Can be integrated with technologies like Change Data Capture (CDC) to monitor changes in source systems and sync them to target systems in real time.

Disadvantages of Real-Time Processing:

- Requires significant infrastructure and resources to handle high volumes of data in real time.

- Complexity in ensuring data quality and consistency when handling fast-moving streams of data.

4. Change Data Capture (CDC)

Change Data Capture (CDC) is a technique used to identify and capture changes made to data in source systems. These changes can then be propagated to downstream systems, ensuring that target systems reflect the latest updates without having to perform full data extracts.
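CDC can be implemented in several ways: log-based tools read the database transaction log, while a simpler variant uses triggers. The sketch below shows trigger-based CDC in SQLite. Triggers record every insert, update, and delete in a `change_log` table, and a consumer applies only changes newer than its last checkpoint to a downstream copy. The table names and the dictionary used as the target are hypothetical stand-ins.

```python
import sqlite3

src = sqlite3.connect(":memory:")  # source system
src.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, tier TEXT);
-- Change log populated by triggers: one row per insert, update, or delete.
CREATE TABLE change_log (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,
    op TEXT, id INTEGER, name TEXT, tier TEXT
);
CREATE TRIGGER cdc_ins AFTER INSERT ON customers BEGIN
    INSERT INTO change_log (op, id, name, tier) VALUES ('I', NEW.id, NEW.name, NEW.tier);
END;
CREATE TRIGGER cdc_upd AFTER UPDATE ON customers BEGIN
    INSERT INTO change_log (op, id, name, tier) VALUES ('U', NEW.id, NEW.name, NEW.tier);
END;
CREATE TRIGGER cdc_del AFTER DELETE ON customers BEGIN
    INSERT INTO change_log (op, id, name, tier) VALUES ('D', OLD.id, NULL, NULL);
END;
""")

def sync_changes(source: sqlite3.Connection, target: dict, last_seq: int) -> int:
    """Apply only changes newer than last_seq to the target; return the new checkpoint."""
    rows = source.execute(
        "SELECT seq, op, id, name, tier FROM change_log WHERE seq > ? ORDER BY seq",
        (last_seq,),
    ).fetchall()
    for seq, op, cid, name, tier in rows:
        if op == "D":
            target.pop(cid, None)
        else:  # inserts and updates are both applied as upserts
            target[cid] = {"name": name, "tier": tier}
        last_seq = seq
    return last_seq

target: dict = {}  # stand-in for a downstream system
checkpoint = 0

src.execute("INSERT INTO customers VALUES (1, 'Ada', 'gold'), (2, 'Bob', 'silver')")
checkpoint = sync_changes(src, target, checkpoint)

src.execute("UPDATE customers SET tier = 'gold' WHERE id = 2")
src.execute("DELETE FROM customers WHERE id = 1")
checkpoint = sync_changes(src, target, checkpoint)  # reads two changes, not the whole table
print(target, checkpoint)
```

Running `sync_changes` on a schedule gives batch replication; running it in a tight loop or on notification gives near-real-time replication.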

Advantages of Change Data Capture (CDC):

- Minimizes the need for full data extracts by capturing only changes, reducing the load on systems.

- Supports both batch and real-time data replication.

- Reduces data latency, ensuring that target systems stay up-to-date with the latest changes.

Disadvantages of Change Data Capture (CDC):

- Complexity in managing data consistency when capturing and applying changes in real time.

- May require specific software or infrastructure to implement.

5. Data Virtualization and Data Federation

Data virtualization and data federation allow organizations to access data from multiple sources without physically moving it into a centralized system. Data virtualization provides a unified view of disparate data sources by creating virtual representations of the data, while data federation involves querying multiple databases or systems and presenting the results as if they came from a single source.
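The sketch below illustrates the federation idea with two independent in-memory SQLite databases standing in for a CRM and a billing system. The hypothetical `revenue_by_customer` function queries each source at request time, pushes a filter and an aggregation down to each one, and joins the results in memory, so no data is copied into a central store.

```python
import sqlite3

# Two independent sources (hypothetical schemas); nothing is copied into a central store.
crm = sqlite3.connect(":memory:")
crm.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT)")
crm.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                [(1, "Ada", "EU"), (2, "Bob", "US"), (3, "Cy", "EU")])

billing = sqlite3.connect(":memory:")
billing.execute("CREATE TABLE invoices (customer_id INTEGER, total REAL)")
billing.executemany("INSERT INTO invoices VALUES (?, ?)",
                    [(1, 120.0), (1, 80.0), (2, 50.0)])

def revenue_by_customer(region: str) -> list[tuple]:
    """Federated query: filter in the CRM, aggregate in billing, join the two results."""
    customers = crm.execute(
        "SELECT id, name FROM customers WHERE region = ? ORDER BY id", (region,)
    ).fetchall()
    ids = [cid for cid, _ in customers]
    if not ids:
        return []
    placeholders = ",".join("?" * len(ids))
    totals = dict(billing.execute(
        "SELECT customer_id, SUM(total) FROM invoices "
        f"WHERE customer_id IN ({placeholders}) GROUP BY customer_id",
        ids,
    ).fetchall())
    # Customers with no invoices still appear, with a total of 0.
    return [(name, totals.get(cid, 0.0)) for cid, name in customers]

print(revenue_by_customer("EU"))
```

Every call reads live data from both sources, so results are always current, but response time depends on the slowest source; that is the performance trade-off of querying many systems at once.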

Advantages of Data Virtualization and Data Federation:

- Provides real-time access to data without the need for replication or storage in a central location.

- Reduces data redundancy by allowing data to remain in its original location.

- Shortens time to value by avoiding the need for data movement.

Disadvantages of Data Virtualization and Data Federation:

- Performance can be affected when querying large volumes of data from multiple systems.

- Data consistency and quality issues may arise due to the lack of a centralized repository.

6. Master Data Management (MDM)

Master Data Management (MDM) is a method of ensuring that critical data—such as customer, product, or financial data—is consistent, accurate, and up-to-date across an organization. MDM creates a single “master” record for key entities, enabling organizations to maintain a single source of truth.
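At the heart of MDM is a match-and-merge step that turns duplicate records from several systems into one master record. The sketch below uses a deliberately simple match rule (exact match on a normalized email address) and a simple survivorship rule (the most recently updated non-null value wins); both rules and the sample records are assumptions, and real MDM platforms typically support fuzzy matching and configurable survivorship.

```python
from datetime import date

# Hypothetical records: the first two describe the same customer in different systems.
records = [
    {"source": "crm", "email": "Ada@Example.com ", "name": "Ada Lovelace",
     "phone": None, "updated": date(2024, 1, 5)},
    {"source": "erp", "email": "ada@example.com", "name": "A. Lovelace",
     "phone": "555-0100", "updated": date(2023, 11, 2)},
    {"source": "billing", "email": "bob@example.com", "name": "Bob Smith",
     "phone": "555-0199", "updated": date(2024, 2, 1)},
]

def match_key(record: dict) -> str:
    """Match rule: records with the same normalized email describe the same customer."""
    return record["email"].strip().lower()

def build_golden_records(records: list[dict]) -> dict[str, dict]:
    groups: dict[str, list[dict]] = {}
    for r in records:
        groups.setdefault(match_key(r), []).append(r)

    golden = {}
    for key, group in groups.items():
        # Survivorship rule: for each attribute, the newest non-null value wins.
        newest_first = sorted(group, key=lambda r: r["updated"], reverse=True)
        master = {"email": key, "sources": sorted(r["source"] for r in group)}
        for attr in ("name", "phone"):
            master[attr] = next((r[attr] for r in newest_first if r[attr] is not None), None)
        golden[key] = master
    return golden

for key, master in build_golden_records(records).items():
    print(key, master)
```

Keeping the list of contributing sources on each master record makes it possible to trace where every value came from, which supports the governance benefit of MDM.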

Advantages of Master Data Management (MDM):

- Improves data quality by standardizing and de-duplicating key data across systems.

- Enhances data governance and compliance by maintaining a single source of truth.

- Supports better decision-making by ensuring accurate, consistent data is available.

Disadvantages of Master Data Management (MDM):

- Complex to implement and maintain, requiring significant investment in infrastructure and governance processes.

- Requires alignment between multiple departments to ensure data consistency.

7. API-Based Integration

API-based integration leverages application programming interfaces (APIs) to connect different systems, allowing them to share data in real time. APIs can be used to expose specific functionality or data from one system to another, making integration easier and more flexible.
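A self-contained Python sketch of API-based integration: one system exposes customer records through a small JSON endpoint guarded by an API key, and another system fetches a record on demand. The `/customers/{id}` path, the `X-API-Key` header, and the key value are hypothetical; a production API would use HTTPS and a stronger authentication scheme such as OAuth 2.0.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

API_KEY = "demo-key"  # hypothetical shared secret, for illustration only
CUSTOMERS = {"1": {"id": "1", "name": "Ada", "tier": "gold"}}

class CustomerAPI(BaseHTTPRequestHandler):
    """System A exposes customer data over a small JSON API."""

    def do_GET(self):
        if self.headers.get("X-API-Key") != API_KEY:
            self.send_error(401, "missing or invalid API key")
            return
        parts = self.path.strip("/").split("/")
        if len(parts) == 2 and parts[0] == "customers" and parts[1] in CUSTOMERS:
            body = json.dumps(CUSTOMERS[parts[1]]).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404, "not found")

    def log_message(self, *args):  # keep the demo output quiet
        pass

def fetch_customer(base_url: str, customer_id: str) -> dict:
    """System B pulls a record on demand instead of waiting for a batch export."""
    req = urllib.request.Request(f"{base_url}/customers/{customer_id}",
                                 headers={"X-API-Key": API_KEY})
    with urllib.request.urlopen(req, timeout=5) as resp:
        return json.loads(resp.read())

server = HTTPServer(("127.0.0.1", 0), CustomerAPI)  # port 0: the OS picks a free port
threading.Thread(target=server.serve_forever, daemon=True).start()
base = f"http://127.0.0.1:{server.server_port}"

customer = fetch_customer(base, "1")
print(customer)

# A request without the key is rejected by the authentication check.
unauth_status = None
try:
    urllib.request.urlopen(f"{base}/customers/1", timeout=5)
except urllib.error.HTTPError as err:
    unauth_status = err.code
print("unauthenticated request:", unauth_status)

server.shutdown()
server.server_close()
```

Each integration like this is another endpoint to secure, version, and monitor, which is why managing many API connections becomes complex at scale.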

Advantages of API-Based Integration:

- Facilitates real-time data integration between systems.

- Provides flexibility to integrate cloud-based and on-premises systems.

- Enables modular and scalable integration as APIs can be easily modified or expanded.

Disadvantages of API-Based Integration:

- Requires robust security and authentication mechanisms to ensure data protection.

- Complex to manage and monitor multiple API connections, especially in large-scale environments.

8. Cloud-Based Data Integration

Cloud-based data integration leverages cloud platforms to connect data across various cloud and on-premises systems. It offers scalable and flexible solutions for organizations that are adopting hybrid or multi-cloud environments.

Advantages of Cloud-Based Data Integration:

- Scalable and flexible, allowing organizations to handle increasing volumes of data.

- Reduces infrastructure costs and complexity by leveraging cloud resources.

- Supports both real-time and batch data integration.

Disadvantages of Cloud-Based Data Integration:

- Data security and privacy concerns when moving sensitive data to the cloud.

- Potential for vendor lock-in with specific cloud platforms.

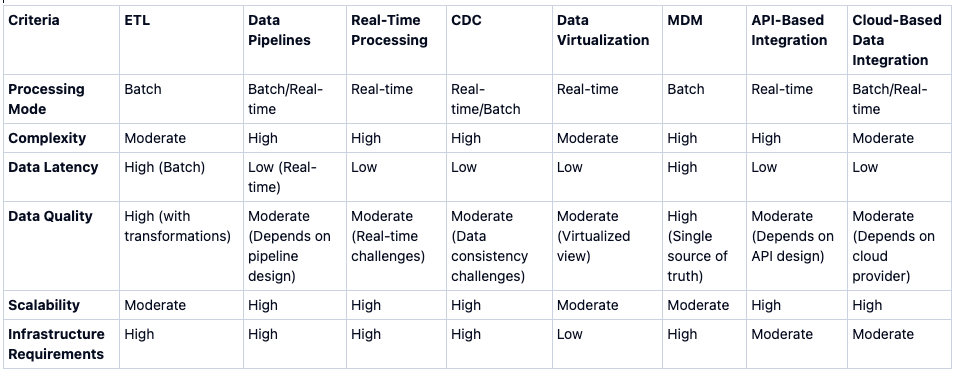

Comparative Analysis of Data Integration Approaches

When choosing a data integration approach, organizations must consider several factors, including their data sources, infrastructure, and business needs. The approaches above differ mainly in processing mode, complexity, data latency, and scalability, and the considerations below show how these criteria point toward specific approaches.

Key Considerations When Choosing an Integration Approach

Choosing the right data integration approach depends on a variety of factors, including:

- Data Volume and Velocity: For high-volume, fast-moving data, real-time processing or data pipelines may be the best approach. For batch-oriented workloads that can tolerate some delay, ETL may be sufficient.

- Complexity of Data Transformation: If the data requires significant transformation, enrichment, or cleansing, an ETL approach may be more appropriate, while real-time processing may be a better fit when only minimal transformation is needed.

- Data Quality Requirements: MDM solutions should be considered if maintaining high-quality master data across the organization is a priority.

- Cloud vs. On-Premises: Cloud-based data integration can provide flexibility and scalability, but security and compliance should be carefully considered, especially when handling sensitive data.

- Real-Time Requirements: If immediate data availability is critical, real-time processing or CDC may be required.
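The considerations above can be read as a rough decision rule. The hypothetical helper below encodes them directly and returns candidate approaches rather than a single answer. The flag names are assumptions, and the cloud vs. on-premises question is left out because it hinges on security and compliance constraints rather than a simple yes/no.

```python
from dataclasses import dataclass

@dataclass
class Requirements:
    # Hypothetical flags, one per consideration in the list above.
    high_volume_fast_data: bool = False
    heavy_transformation: bool = False
    master_data_quality_priority: bool = False
    needs_immediate_availability: bool = False

def candidate_approaches(req: Requirements) -> list[str]:
    """Map each consideration to the approaches it points toward (a rough heuristic)."""
    picks: list[str] = []
    if req.high_volume_fast_data:
        picks += ["real-time processing", "data pipelines"]
    if req.heavy_transformation:
        picks.append("ETL")
    if req.master_data_quality_priority:
        picks.append("MDM")
    if req.needs_immediate_availability:
        picks += ["real-time processing", "CDC"]
    if not picks:
        picks.append("ETL")  # batch-oriented default when nothing demands lower latency
    # Remove duplicates while keeping first-seen order.
    return list(dict.fromkeys(picks))

print(candidate_approaches(Requirements(heavy_transformation=True,
                                        needs_immediate_availability=True)))
```

A result with several entries is expected: many organizations combine approaches, for example CDC feeding a pipeline that lands data in an ETL-managed warehouse.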

There is no one-size-fits-all solution when it comes to enterprise data integration. Organizations need to carefully evaluate their data sources, business needs, and infrastructure to choose the right approach. ETL remains a robust option for batch processing, while data pipelines and real-time processing are essential for modern, high-velocity data environments. Approaches like CDC and API-based integration are also becoming increasingly relevant for organizations that need flexibility and scalability.

Ultimately, the right integration strategy will depend on an organization’s data architecture, governance requirements, and long-term business goals. By understanding the strengths and weaknesses of each integration approach, enterprises can build a cohesive and future-proof data integration strategy that meets their unique needs.

We know that every organization faces unique challenges and opportunities. At Initus, we understand that a one-size-fits-all approach to integrations doesn’t work. That’s why our team creates software integrations that can support AI-based solutions to address the specific needs of any sector.

Adaptability + Experience + Strategic Methodology. If you have an operational improvement challenge you want to overcome, contact us today.